AI, Surveillance, and the Fragile Line Between Deterrence and Disaster

How artificial intelligence is reshaping nuclear command systems—and why future wars may begin by mistake

The next great danger in global conflict may come not only from missiles or soldiers but from machines that think faster than humans. As artificial intelligence (AI) becomes deeply embedded in military planning, surveillance, and nuclear command systems, the risk of accidental war is growing quietly but steadily. What once depended on human judgment is increasingly shaped by algorithms, sensors, and automated decision-making. In this new environment, a single error, misreading, or cyber manipulation could push the world toward catastrophe.

During the Cold War, nuclear deterrence was built on slow, deliberate processes. Even at moments of extreme tension, humans remained at the center of launch decisions. Today, that model is changing. Major powers such as the United States, China, and Russia are all investing heavily in AI-driven early-warning systems, autonomous surveillance platforms, and decision-support tools. These systems are designed to react faster than any human ever could—but speed comes with a cost.

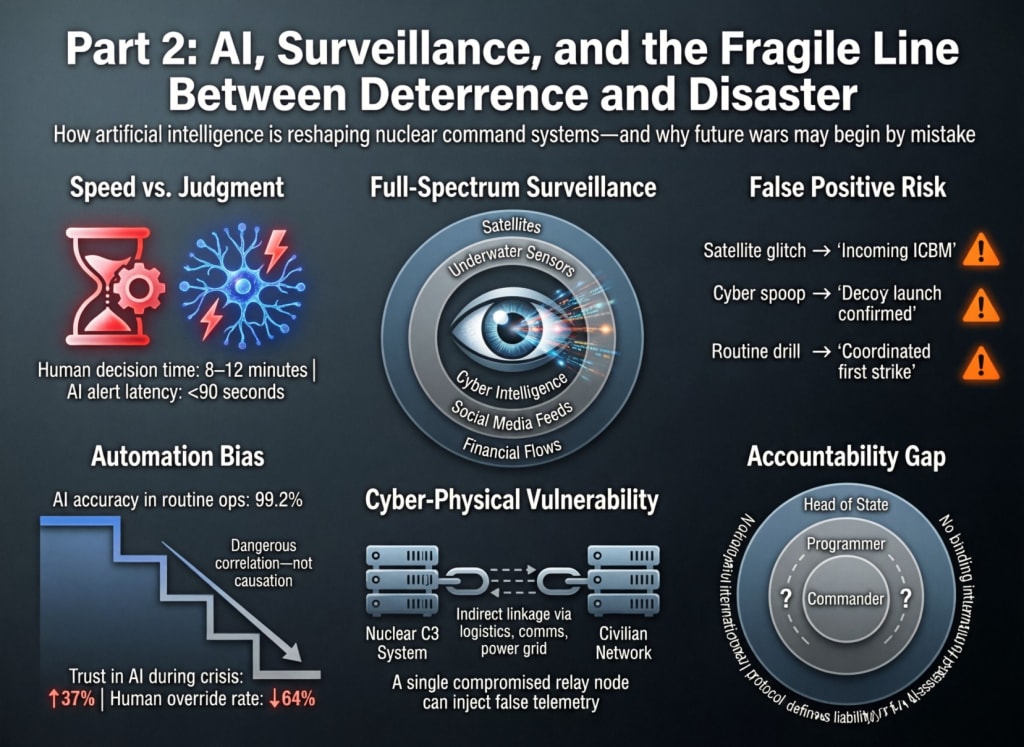

Modern surveillance no longer relies only on satellites and radar. AI systems now fuse data from space-based sensors, underwater detectors, cyber intelligence, social media monitoring, and financial flows. The goal is “full-spectrum awareness”: knowing what an enemy is doing before it strikes. In theory, this improves safety. In practice, it can also increase uncertainty. AI does not understand intent; it recognizes patterns. When patterns look threatening, systems may escalate alerts even if no attack is planned.

This is where nuclear command risks become most serious. Nuclear-armed states rely on early-warning systems to detect incoming missiles. If AI-enhanced sensors misidentify routine activity, a satellite glitch, or a cyber spoof as an attack, leaders may have only minutes to decide. Under pressure and with incomplete information, the chance of a tragic mistake rises sharply. History already contains near-launch incidents caused by human or technical error. In 1983, for example, a Soviet early-warning satellite system falsely reported incoming U.S. missiles, and the duty officer, Stanislav Petrov, judged it a false alarm rather than reporting an attack. AI may shrink reaction time so much that this kind of human correction becomes impossible.

Another major concern is automation bias. As AI systems become more accurate in everyday use, military and political leaders may begin to trust them too much. If an AI system recommends escalation, humans may assume the machine “knows better,” even when warning signs are ambiguous. In nuclear strategy, blind trust is extremely dangerous. Machines cannot weigh moral responsibility, political consequences, or human cost in the same way people can.

Cyber warfare adds another layer of risk. Nuclear command systems are no longer fully isolated; they are connected, directly or indirectly, to digital networks. A sophisticated cyberattack could manipulate the data feeding AI systems, creating false alerts or hiding real threats. In such a scenario, a country might believe it is under attack when it is not, or fail to detect a genuine danger. Either outcome could destabilize deterrence.

AI also changes the logic of first strike and second strike. If a state believes its AI systems give it superior detection and response speed, it may feel pressure to act first in a crisis. This mindset erodes traditional deterrence, which depended on mutual restraint. As more states adopt AI-driven command tools, crises may escalate faster than diplomacy can respond.

Smaller regional conflicts make this even more dangerous. A clash involving Iran or Israel, or a crisis on the Korean Peninsula, could quickly draw in major powers whose AI systems are on high alert. A regional missile launch, misinterpreted by an automated system as part of a larger attack, could trigger global escalation. In an AI-driven environment, wars may start not because leaders choose them but because systems push them there.

There is also the issue of accountability. If an AI-assisted system contributes to a nuclear launch decision, who is responsible? The programmer? The commander? The political leader? This legal and moral uncertainty weakens existing safeguards. It also makes secrecy more tempting, as states hesitate to reveal how much control machines really have.

To prevent disaster, experts increasingly argue for strict human-in-the-loop requirements—ensuring that no nuclear decision can be made without clear human authorization. Transparency, confidence-building measures, and new international agreements on AI in warfare are urgently needed. Without them, the world risks entering an era where wars are decided not by strategy or diplomacy, but by lines of code reacting to imperfect data.

The future of war may be silent, fast, and automated. Whether it remains controlled—or slips into accidental annihilation—depends on choices being made right now.

About the Creator

Wings of Time

I'm Wings of Time, a storyteller from Swat, Pakistan. I write immersive, researched tales of war, aviation, and history that bring the past roaring back to life.