What AI Can’t Learn from Data: African Ethics in a Digital Age

Centering human values in an increasingly artificial world

I didn’t grow up thinking about artificial intelligence. I grew up thinking about fairness. In classrooms, courts, street corners, and songs — fairness wasn’t just an abstract idea. It was personal. It meant something when someone was overlooked, misjudged, or discarded. It had a smell, a tone of voice, a lived weight.

Now, the world is building machines that make decisions once left to humans. Who gets hired. Who gets a loan. Who gets flagged as suspicious. And I keep wondering: Can a machine be taught what fairness feels like?

AI Sees Patterns. It Misses Pain

Let’s be honest — most AI systems aren’t malicious. But they are deeply limited. They don’t understand why someone might avoid a bank. Or how being misnamed by a system becomes more than a technical error. They process probability, not history. Prediction, not experience.

They replicate whatever the data tells them is “normal.” But who decides what normal is? In many places, including across Africa, “normal” has often meant being excluded. Misread. Misrepresented. And now those same patterns are being baked into digital systems, this time wrapped in a cloak of objectivity.

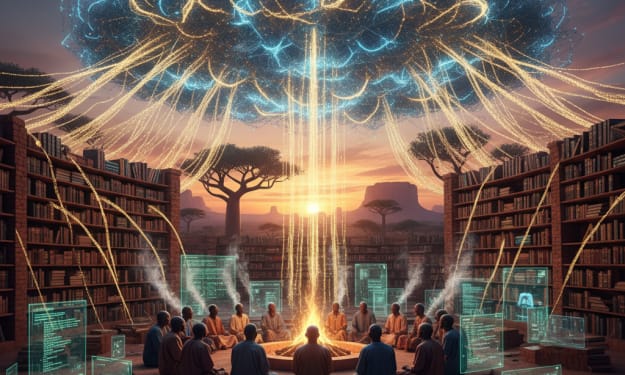

If Ubuntu, the Southern African ethic often summed up as “I am because we are,” could speak to AI, it would say: “You don’t understand us because you only measure us.” Ubuntu isn’t a relic. It’s a living ethic. It means identity is not a solo performance; it’s a choir. It means morality grows in how we relate, not just in how we compute. Imagine if AI had to pass a test not only for speed or accuracy, but for humility.

What would that look like? An algorithm that pauses when context is missing. A system that doesn’t pretend to be neutral when it’s not. A chatbot that doesn’t just give you answers but asks how you’re doing. These sound poetic. But maybe that’s the point. Ethics isn’t only about logic. It’s also about poetry. And pain. And memory.

Africa Isn’t a Tech Desert. It’s an Ethical Archive

We’re often told that Africa is “catching up” to global innovation. But I’d argue: We’re holding a kind of wisdom the world is only starting to need. Long before predictive algorithms, African societies used storytelling, elders, rituals, and moral imagination to guide choices. Not always perfectly — but always with people in mind.

We didn’t separate reason from emotion. We didn’t pretend choices could be “clean” or purely rational. That’s the kind of ethical grounding AI needs right now. Not more efficiency. More accountability. Not just smarter systems. Wiser ones.

I’m not anti-tech. I’m anti-amnesia. When we forget the people behind the data, or the stories beneath the numbers, we risk creating machines that feel powerful — but are blind to the very things that make us human. Africa has something to say about this. And it’s not a whisper. It’s a declaration: “You are not your data. You are your dignity.” If AI is to serve the world, it must learn to see us whole.

I write about AI and ethics not because I fear machines — but because I fear forgetting what we owe each other. Before we automate morality, we must remember the slow, painful, beautiful way real morality is made together.

About the Creator

David Thusi

✍️ I write about stolen histories, buried brilliance, and the fight to reclaim truth. From colonial legacies to South Africa’s present struggles, I explore power, identity, and the stories they tried to silence.
